Digital Technology Challenges Health Privacy

Study Reveals Critical Issues for Regulation

There are virtually no regulatory structures in the US to protect health privacy in the context of the digital health footprint.

That’s the blunt conclusion of a new analysis in JAMA Network Open by David Grande, Raina Merchant, David Asch, Carolyn Cannuscio, and colleagues. Drawing on interviews and surveys with 26 experts in telemedicine, law, data mining, marketing, health policy, computer science, ethics, cybersecurity, and machine learning/artificial intelligence, the authors describe challenges to health privacy inherent in how data scientists develop and commercialize personalized health data, which together make up our “digital health footprint.”

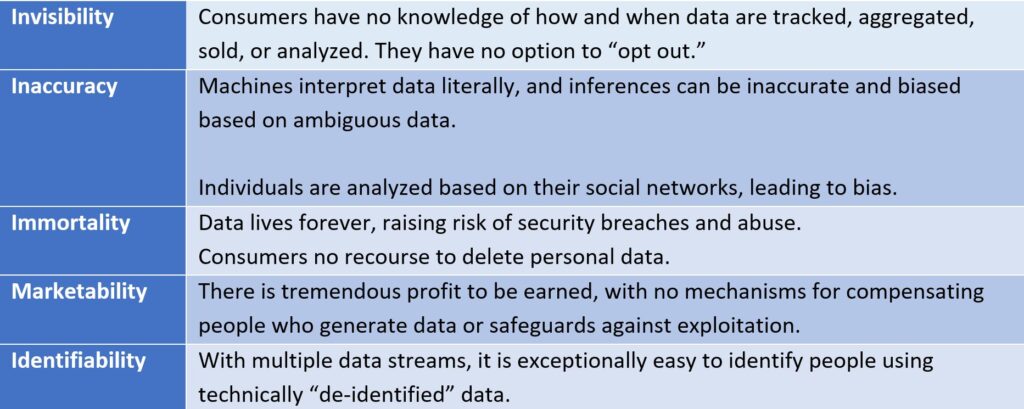

These challenges, listed below, reveal the weakness of the United States’ sector-specific approach, in which protections are conferred separately on health care encounter data through the Health Insurance Portability and Accountability Act (HIPAA) and on genetic information through the Genetic Information Nondiscrimination Act (GINA). The crux of the problem is that the line between health and non-health data has blurred, with almost any data stream able to generate health insights. And as this digital health footprint emerges, privacy is largely unprotected.

What is a digital health footprint?

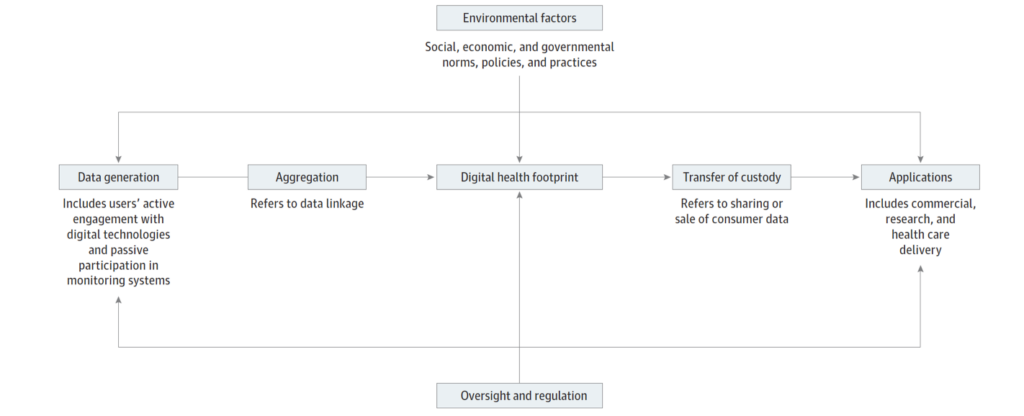

The “digital health footprint” is a useful construct. As individuals use data-generating technologies, they leave traces that data scientists use to build lifelike models of their behavior and interests, including inferences about their health. Most consumer data are generated passively, without the individual’s direct input or knowledge. The data are aggregated and linked across different databases and platforms, mined for insights, and then put to use in commercial, research, and health care delivery settings. Data are often repurposed in unexpected ways, far removed from their original intent. Government policy and social norms make some of this interaction effectively involuntary: filing taxes, texting, and banking all generate data that people cannot easily avoid producing.

Our personal data are bought and sold without our knowledge or consent. Corporations and social media companies store personal browsing histories, online purchases, emails, and chat messages. Smartphone applications track location and contact networks. Data brokers merge digital data with other records (housing, criminal histories, employment) to generate individualized dossiers for sale on an open, lightly regulated market. Data scientists mine millions of personal files for commercial insights. No industry is immune, least of all the $4 trillion American health care system.

In the wake of the Cambridge Analytica scandal, fake news, and myriad other controversies, many more people have suddenly realized how unruly the market for personal data is. Calls to regulate “big tech” have grown, including in health care.

Looking ahead

What would better regulations entail? In Europe, the European Union’s General Data Protection Regulation (GDPR) establishes universal privacy rights across all member states. It limits how long personal data can be retained and requires a degree of transparency in how data are collected and used. The new California Consumer Privacy Act empowers consumers to access their personal data and to refuse its collection or commercialization. While the effects of these regulatory approaches still need to be assessed, it is clear that broader, universal regulations are required to answer a crucial question: who owns our data?

Both the privacy risks and the potential benefits of health-related data are high. For example, real-time non-health data may alert providers to patients whose suicide risk has suddenly increased. Smartly deployed machine learning algorithms can help doctors identify patients at risk of short-term mortality who need end-of-life counseling. Many biases in artificial intelligence can be addressed with technical fixes and better design. Integrating non-health data into electronic medical records is a necessary step toward addressing the social determinants of health. The upside is worth pursuing.

Some historical perspective is in order. The market for personal data is massive, but it is very young. In 2010, fewer than 35% of adults owned a smartphone. Today, 4 in 5 people have smartphones, and cellphone ownership is ubiquitous. The majority of hospitals and doctors did not adopt basic electronic health records (EHRs) until 2013. Today, the rate of EHR adoption in hospitals is over 95%. Digital data collection is everywhere, but its use is still novel. These technologies are neither autonomous nor self-aware: people and institutions design, build, and implement them. Regulators, policymakers, and the public can and should demand a more active say in how these tools are used.

The study, “Health Policy and Privacy Challenges Associated With Digital Technology,” was published in JAMA Network Open on July 9, 2020. Authors include David Grande, Xochitl Luna Marti, Rachel Feuerstein-Simon, Raina M. Merchant, David A. Asch, Ashley Lewson, and Carolyn C. Cannuscio.