Briefing: The Impact of Repealing the Centers for Medicare and Medicaid Services Minimum Staffing Rule on Patient Outcomes

Presented to U.S. Senator Elizabeth Warren

As artificial intelligence and machine learning (AI/ML) continue to spread into all aspects of clinical medicine, one of the many complex problems to be solved, and perhaps the most important, is the method of payment.

AI is now established as a virtual medical device that clinicians and health systems can bill for separately. Since 2015, the US Food and Drug Administration (FDA) has cleared more than 200 AI devices for use in clinical medicine. Over the last two years, some commercial payers have begun reimbursing for certain AI applications on a per-use basis. The Centers for Medicare and Medicaid Services (CMS) has not yet issued formal guidance on coverage of AI but is providing per-use reimbursement for eight AI diagnostic, imaging, and surgical applications.

This early trend toward per-use payment in an emerging and very different area of health care technology is cause for concern, according to a new paper by Ravi Parikh, MD, MPP, LDI Senior Fellow and Associate Director at the Penn Center for Cancer Care Innovation (PC3I).

Published in the May issue of npj Digital Medicine, the commentary, “Paying for Artificial Intelligence in Medicine,” notes that “experience with traditional medical devices has shown that per-use reimbursement may result in the overuse of AI.” Indeed, CMS’ own 2022 Physician Fee Schedule “acknowledges the possibility that AI poses a greater risk of over utilization than most devices and offers greater potential for fraud, waste, and abuse.”

The commentary discusses five alternative reimbursement approaches for health care-related AI:

• Offering no separate reimbursement for AI and only deploying it if health systems achieve added value and savings or increased revenue because of more efficient care and better outcomes.

• Value-based payments that reward demonstrated, positive effects of AI on quality metrics.

• Premarket advance market commitments to AI in response to specific care challenges, much like the XPrize Foundation’s model.

• Time-limited add-on reimbursements like CMS’s transitional drug add-on payment adjustment (TDAPA), which is meant to cover new pharmaceuticals not yet accounted for in bundled or episode-based payments.

• Rewards for interoperability and bias mitigation across multiple populations, in which payers provide financial incentives for AI devices that demonstrate interoperability and applicability to multiple settings and patient groups in premarket testing.

The npj Digital Medicine commentary is the latest in a six-year string of papers and studies on medical algorithms that have made Parikh an emerging top authority on issues related to the clinical use of AI/ML. The work he is best known for during this period focuses on validating machine learning prognostic models and implementing them in clinical care to improve supportive and end-of-life care. It has been one of the first examples of using machine learning-based behavioral strategies to improve care delivery and has resulted in positive end-of-life outcomes for Penn Medicine patients.

His interest in the AI/ML used in predictive analytics first came into focus with the 2016 publication of his Journal of the American Medical Association (JAMA) Viewpoint piece, “Integrating Predictive Analytics Into High-Value Care.” That same year, the FDA issued its first approval of an AI medical device.

In April 2017 Parikh co-authored with J. Sanford Schwartz and Amol Navathe a New England Journal of Medicine piece entitled “Beyond Genes and Molecules — A Precision Delivery Initiative for Precision Medicine.” It critiqued the federal grant policy that heavily favored genetic and molecular-related research to boost its precision medicine initiative rather than the rapidly evolving field of AI.

That year, Parikh was part of a research team that applied a self-learning algorithm to the electronic health records of 26,000 individuals to create a tool that could accurately identify cancer patients at high risk of dying shortly after starting chemotherapy. The paper, “Development and Application of a Machine Learning Approach to Assess Short-term Mortality Risk Among Patients with Cancer Starting Chemotherapy,” was published in the October 2018 issue of JAMA.

In early 2019, Parikh co-authored with Amol Navathe a Policy Forum piece in Science magazine calling for the FDA to tighten its regulatory standards in a manner that approximated those now used for drugs and devices.

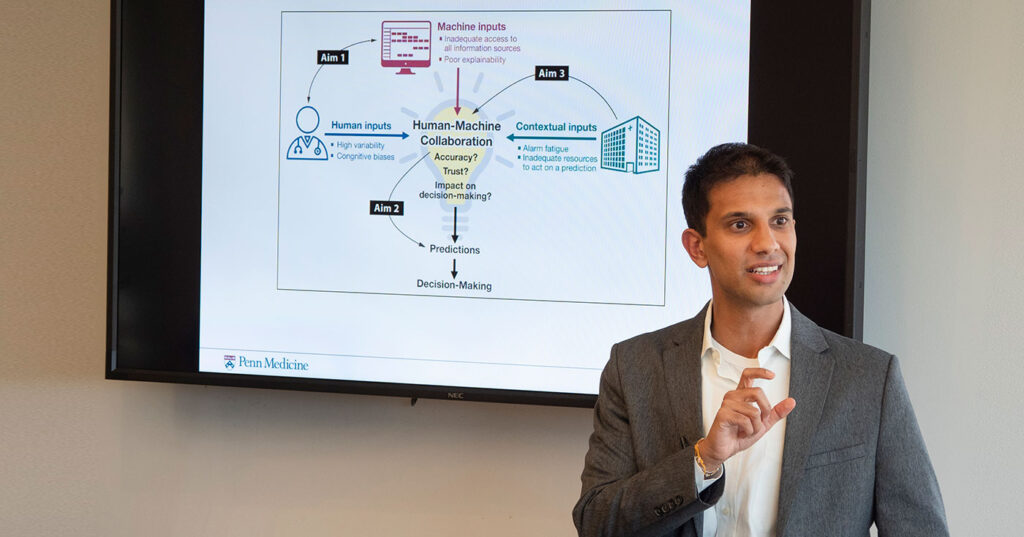

Later in 2019, in a seminar at the University of Pennsylvania Abramson Cancer Center’s PC3I, Parikh recapped his ongoing research exploring how advanced machine learning systems might work as true partners with humans. The idea is that both parties, human clinician and self-learning algorithm, would develop unique insights into a patient’s condition and collaboratively reach the decisions most likely to improve the outcome. Human-machine collaboration was the subject of Parikh’s successful National Cancer Institute Mentored Clinician Scientist Research Career Development Award (K08).

In a November 2019 JAMA Viewpoint with Amol Navathe and Stephanie Teeple, Parikh took aim at AI/ML bias. As concern about the social biases “baked into” medical algorithms gained wider attention, the piece proposed that AI techniques themselves could be used both to identify bias in health care-related AI devices and to help identify interventions to correct existing biases in ongoing clinician decision making.

Throughout 2020, Parikh, who is also a Staff Physician at Philadelphia’s Corporal Michael J. Crescenz VA Medical Center, co-led a Penn research team with Amol Navathe and Kristin Linn exploring whether a machine-learning algorithm could be used to identify previously unidentifiable subgroups of high-risk VA patients. The team succeeded in pioneering a method that informed new kinds of targeted treatment strategies. The paper was published in February 2021 in PLOS ONE as “A Machine Learning Approach to Identify Distinct Subgroups of Veterans at Risk for Hospitalization or Death Using Administrative and Electronic Health Record Data.”

In 2021, Parikh and George Maliha looked at the dark side of AI and its potential for going wrong in ways that cause medical injury and legal liability. Their Perspective piece in the April 2021 Milbank Quarterly noted: “Despite their promise, AI/ML algorithms have come under scrutiny for inconsistent performance, particularly among minority communities… Algorithm inaccuracy may lead to suboptimal clinical decision making and adverse patient outcomes.”

The Milbank Quarterly piece went on to explain: “Physicians have a duty to independently apply the standard of care for their field, regardless of an AI/ML algorithm output. While case law on physician use of AI/ML is not yet well developed, several lines of cases suggest that physicians bear the burden of errors resulting from AI/ML output.”

In a June 2021 “Machine Learning 101” panel convened by the American Medical Association for medical students, Parikh advised the audience that algorithm-generated results should be viewed as simply one of many data points a physician considers, along with lab results and “how the patient looks in front of you.”

In another study published in npj Digital Medicine in July 2021, Parikh detailed medical situations in which AI/ML approaches are of little use. The study found that certain patients whose mortality risk rises only close to death may not benefit from the integration of prognostic algorithms into their care.

In a National Library of Medicine National Center for Biotechnology Information preprint posted earlier this year, Parikh analyzed the performance of AI/ML systems during the pandemic. Because the COVID-19 crisis caused drastic changes and disruptions in every part of health care delivery and its record systems, the study looked at how machine learning systems that “feed” on this big data were affected. Entitled “Pandemic-Related Decreases in Care Utilization May Negatively Impact the Performance of Clinical Predictive Algorithms and Warrant Assessment and Possible Retraining of Such Algorithms,” the paper reported that across the arc of the pandemic, self-learning algorithms experienced a downward “performance drift.”

In a May 2022 paper in the journal Supportive Care in Cancer with Judy Shea, Parikh led a qualitative study of oncologists’ personal feelings about the integration of machine learning prognoses into their practices. He found that those clinicians were concerned about the accuracy and potential bias of predictive algorithms and worried that the algorithms may foster reliance on machine prediction rather than clinical intuition.
