

Key Findings

Deaths among women and children in low- and middle-income countries fell significantly with government-led cash transfer programs, LDI studies in Nature and The Lancet show. Cash transfer programs, which provide direct financial support to people living in poverty, are an increasingly common anti-poverty tool. Using data from 37 low- and middle-income countries, the research provides the first evidence that these programs substantially reduce mortality rates population-wide. This impact is due to better maternal and reproductive health, childhood nutrition, and vaccination rates when households have more resources.

“We hypothesized that the programs, which historically have been seen as anti-poverty initiatives, can be strong levers for improving population health,” said LDI Senior Fellow Aaron Richterman. He co-authored the studies with Senior Fellows Harsha Thirumurthy and Jere Behrman, and Statistical Analyst Elizabeth Bair. The hypothesis built on the team’s earlier work showing that cash transfer programs lead to population-wide reductions in sexually transmitted infections, HIV diagnoses, and AIDS-related deaths.

The new studies analyzed nearly 20 years of survey data, from 2000 to 2019, from more than 7 million people. Cash transfer programs in the studies covered approximately 25% of a country’s population, and 50% of programs provided unconditional support to recipients who met means-testing criteria. Using statistical methods to estimate causal effects and comparing health outcome trends for countries with and without cash transfer programs, the researchers found that the programs reduce population-level mortality rates and improve multiple health outcomes and behaviors.
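The comparison described above, trends in countries with programs set against trends in countries without them, is the basic idea behind a difference-in-differences design (the term appears in the title of The Lancet paper). The sketch below shows only the simplest two-group, two-period version. The function name and all the rates are hypothetical illustrations, not figures or methods taken from the studies, which used more elaborate models.

```python
def did_estimate(treated_before, treated_after, control_before, control_after):
    """Simplest two-group, two-period difference-in-differences estimate.

    Returns the change in the treated group minus the change in the
    comparison group. A negative value means the outcome fell more
    (or rose less) where the program was introduced.
    """
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Hypothetical mortality rates per 1,000 people. These are made-up
# numbers for illustration only, NOT results from the studies.
effect = did_estimate(
    treated_before=10.0, treated_after=7.0,
    control_before=10.0, control_after=9.0,
)
print(f"Estimated program effect: {effect:+.1f} deaths per 1,000")
```

Subtracting the comparison group's change removes trends that affect all countries alike, so the remainder can be attributed to the program only if the two groups would otherwise have followed parallel paths.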

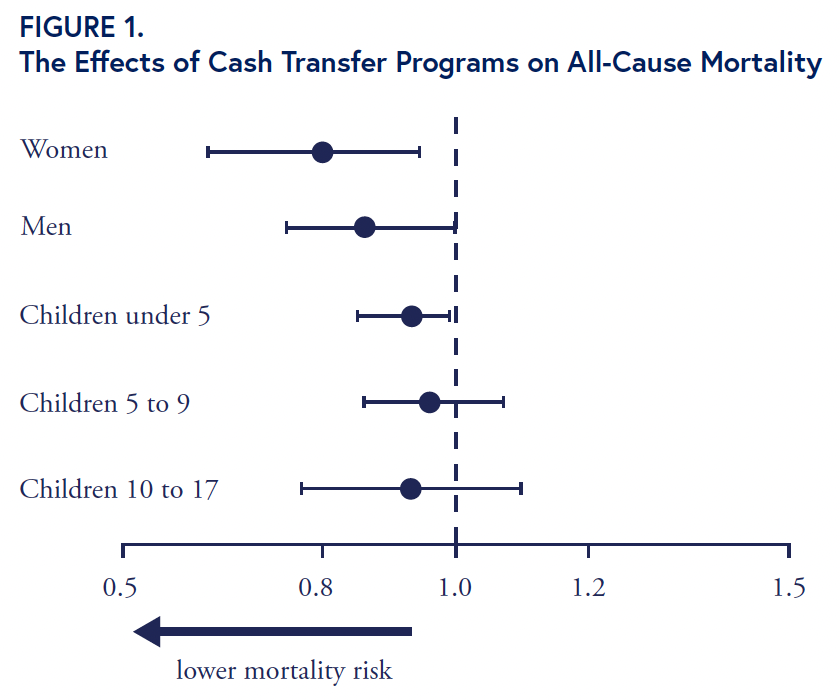

With cash transfer programs, the risk of all-cause death fell 20% among women aged 18 years or older and 8% among children younger than 5 (Figure 1). These results translate annually to approximately one life saved per 1,000 women and two lives saved per 1,000 children. Mortality risk also dropped for men, but the effect was not statistically significant at conventional levels. Mortality rates declined within two years of the introduction of the cash transfer programs and fell further over time, the Nature study found.
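The relative and absolute figures above can be connected with simple arithmetic: lives saved per 1,000 is roughly the baseline annual death rate per 1,000 multiplied by the relative risk reduction. The sketch below runs that relationship backward to show the baseline rates the reported numbers would imply. The function name is hypothetical, and the resulting baselines are a back-of-envelope inference from rounded figures, not values reported by the studies.

```python
def implied_baseline_per_1000(lives_saved_per_1000, relative_reduction):
    """Back out the baseline annual death rate per 1,000 people.

    Assumes: lives_saved = baseline_rate * relative_reduction.
    This is a rough inference from rounded figures, not a reported value.
    """
    return lives_saved_per_1000 / relative_reduction


# Figures quoted in the text: about 1 life saved per 1,000 women with a
# 20% risk reduction, and 2 per 1,000 children with an 8% reduction.
women_baseline = implied_baseline_per_1000(1, 0.20)
children_baseline = implied_baseline_per_1000(2, 0.08)

print(f"Implied baseline, women: about {women_baseline:.0f} deaths per 1,000 per year")
print(f"Implied baseline, children under 5: about {children_baseline:.0f} deaths per 1,000 per year")
```

The same percentage reduction saves more lives where baseline mortality is higher, which is why the smaller relative effect among children still corresponds to more lives saved per 1,000.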

Additional findings suggested a dose-response effect: Mortality reductions were larger in countries with cash transfer programs that covered a greater share of the population and provided larger payments. Thus, Thirumurthy said, programs that “go big” in coverage or cash transfer amounts will likely have a stronger population-health impact.

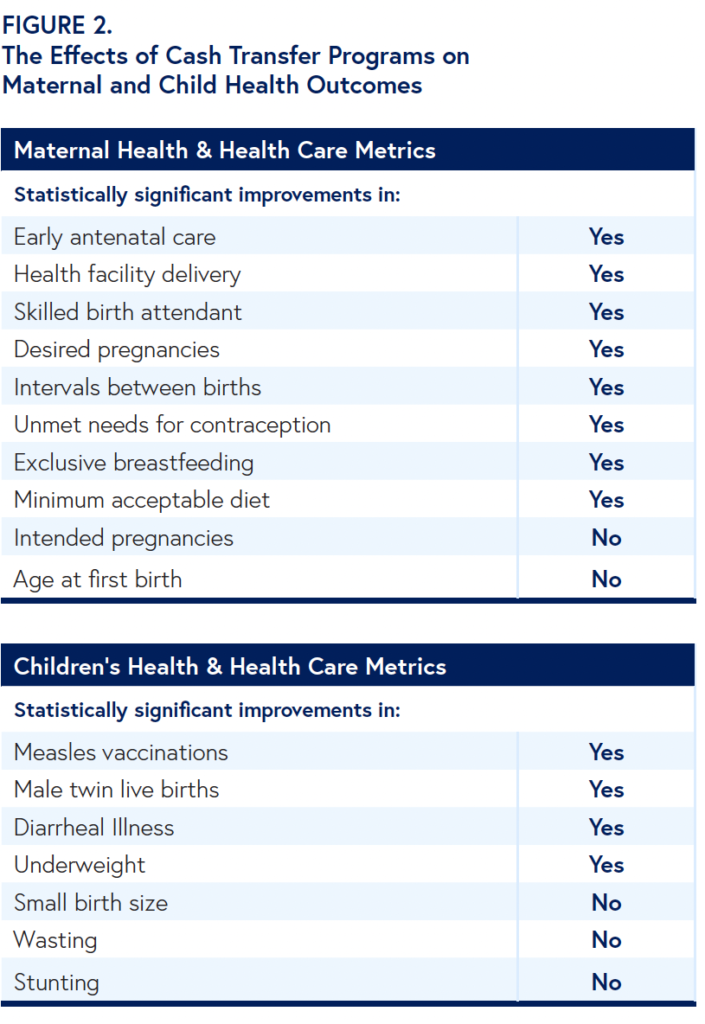

How do cash transfer programs reduce all-cause mortality rates? In The Lancet, the research team reported statistically significant improvements in key indicators that countries use to track progress in health, sustainability, and development, particularly in women’s reproductive health and children’s health (Figure 2).

With cash transfer programs, pregnant women were more likely to receive early antenatal care, deliver in a health care facility, and have a skilled birth attendant at delivery. Women reported more pregnancies that were wanted and longer intervals between births. Most of these factors were linked to lower infant mortality rates. Women had fewer unmet needs for contraception and were more likely to practice exclusive breastfeeding and provide their children with a minimum acceptable diet.

Cash transfer programs led to higher measles vaccination rates among children, fewer diarrheal illnesses, and a lower likelihood of being underweight. Outcomes that did not significantly change were pregnancies reported to be intended, a woman’s age at first birth, small birth size, and childhood wasting or stunting.

Nearly 700 million people worldwide live in extreme poverty (defined as living on $2 a day), a number that grew by 97 million during the COVID-19 pandemic. Cash transfer programs also expanded during this time: About 70% of programs documented by the World Bank in 2022 started during the pandemic, covering nearly 1.4 billion people.

Launching cash transfer programs is a wise decision, the LDI studies show, because the programs improve the health of the entire population. Reasons for the widespread benefits include reductions in infectious diseases and economic spillover effects as beneficiaries share resources and increase spending within their communities.

“The evidence is now hard to ignore,” Thirumurthy said. “When governments in low- and middle-income countries put cash directly into the hands of low-income families, people live longer, healthier lives. Cash transfers work — and they’re one of the smartest investments we can make in population health.”

The research team seeks to partner with local and national governments to design and evaluate new programs, particularly basic income grants that provide sizable cash transfers for the poorest segments of the population. The researchers plan to analyze how cash transfer programs affect mental health conditions and noncommunicable diseases such as hypertension, diabetes, and high cholesterol.

They are exploring new approaches to estimate the overall economic returns on investments in cash transfer programs. More granular analyses will examine how transfer amounts, population coverage, eligibility requirements, and other program characteristics influence overall effectiveness.

Both studies used the authors’ database on government-led cash transfer programs and Demographic and Health Surveys from households in 37 low- and middle-income countries in Africa, Latin America, Southeast Asia, and the Caribbean, roughly half with and half without cash transfer programs. Limitations included reliance on self-reported data and samples that primarily represented younger women of reproductive age. Outcomes for 17 metrics of maternal and children’s health were based on data from more than 2.1 million live births and more than 950,000 children under age 5 reported by survey respondents.

Richterman, Aaron, Tra-My Ngoc Bùi, Elizabeth F. Bair, Gregory Jerome, Christophe Millien, Jean Christophe Dimitri Suffrin, Jere R. Behrman, and Harsha Thirumurthy. “The Effects of Government-led Cash Transfer Programmes on Behavioural and Health Determinants of Mortality: A Difference-in-differences Study.” The Lancet 406, no. 10520 (November 11, 2025): 2656–66. https://doi.org/10.1016/s0140-6736(25)01437-0.

Richterman, Aaron, Christophe Millien, Elizabeth F. Bair, Gregory Jerome, Jean Christophe Dimitri Suffrin, Jere R. Behrman, and Harsha Thirumurthy. “The Effects of Cash Transfers on Adult and Child Mortality in Low- and Middle-income Countries.” Nature 618, no. 7965 (May 31, 2023): 575–82. https://doi.org/10.1038/s41586-023-06116-2.
